After many hours of studying and labbing the wide-ranging topics on the JNCIE-DC blueprint, I took the JNCIE-DC lab exam and passed! I can proudly say I’m JNCIE-DC #389. In this final post, following my earlier JNCIE-DC blogs about my lab setup and the remote lab environment, I will talk about how I prepared and what it was like to take the lab.

Resources

Working with various cloud provider customers in my daily job really helped in preparing for this test. Being exposed to the platforms and technologies they use gave me a head start, but once I really started labbing the different topics, I felt I still had a long way to go. I was also a champion at postponing the actual start of my preparation. After committing to the goal of passing the test in Q1 of 2021, I picked up the pace, started studying multiple nights a week and got into the flow I like to be in when preparing for a lab exam.

JNCIE-DC self-study workbook

The Juniper Education self-study workbook for the JNCIE-DC lab was by far the resource I used most. In my experience it prepares you for about 95% of the test. Note that it does not cover everything, so you will still need to read documentation and have a deep understanding of the technologies on the exam blueprint.

One example is Class of Service (CoS) configuration on QFX5100 platforms. The workbook labs run only on vQFX devices, which are based on a virtual Q5 PFE rather than the Broadcom Trident2 chip used in the QFX5100. This means you really have to be aware of the physical limitations of the platform when applying CoS configuration.

The same goes for Virtual Chassis Fabric, again a feature that is supported on the QFX5100 (and QFX5110/EX4300) but not on the vQFX.

When working through the workbook labs, you will find that some solutions come with little explanation beyond the correct configuration. Sometimes this made me doubt my own solution, even when I was sure it was correct as well. In those cases it really helps to check the documentation yourself to find out whether your variant is just as valid as the configuration in the workbook.

EVE-NG

My Dell R730 server running bare-metal EVE-NG Pro was a lifesaver during my preparation. The performance was amazing, and I could spin up as many virtual resources as I needed to test various topologies.

Juniper Documentation

I did not read any books as preparation, but you do need a solid understanding of routing protocols, MPLS and EVPN. To brush up on all the knobs and features, I read parts of the Juniper documentation, which has excellent sample configurations that explain what is needed and why.

Taking the lab

I took the lab in March 2021, when the Juniper offices were closed because of COVID-19 and the lab exam was conducted remotely. I’m fortunate to have a dedicated home office that I could close off and be isolated in, as the lab policy requires. All other requirements that your PC and room have to comply with can be found in my previous blog about the remote lab environment.

First of all, be prepared: in 2021 the JNCIE-DC lab is still an 8-hour exam, not the 6-hour format that other tracks have already moved to.

Early in the morning on the day of your lab, you will receive an e-mail with an attachment for the Secure Exam Browser. This is a kiosk application that provides a fully isolated environment in which you access the lab task document, the documentation and a remote desktop environment with access to the equipment. Note that you cannot print the tasks or the lab diagrams, so a widescreen or dual-monitor setup is a big plus! On my ultrawide monitor I could fit the topology diagram, the tasks and the remote desktop (with Notepad and SecureCRT open) next to each other.

You will join a Zoom call in which you need to enable (and keep enabled) both your webcam and your microphone (do not go on mute). At the time mentioned in the e-mail, your proctor will join the Zoom session and move you into a separate room with only you and the proctor in it. You will see the proctor jump in and out of your room at random times to check on what you are doing via your webcam, your audio and the screen share that runs constantly.

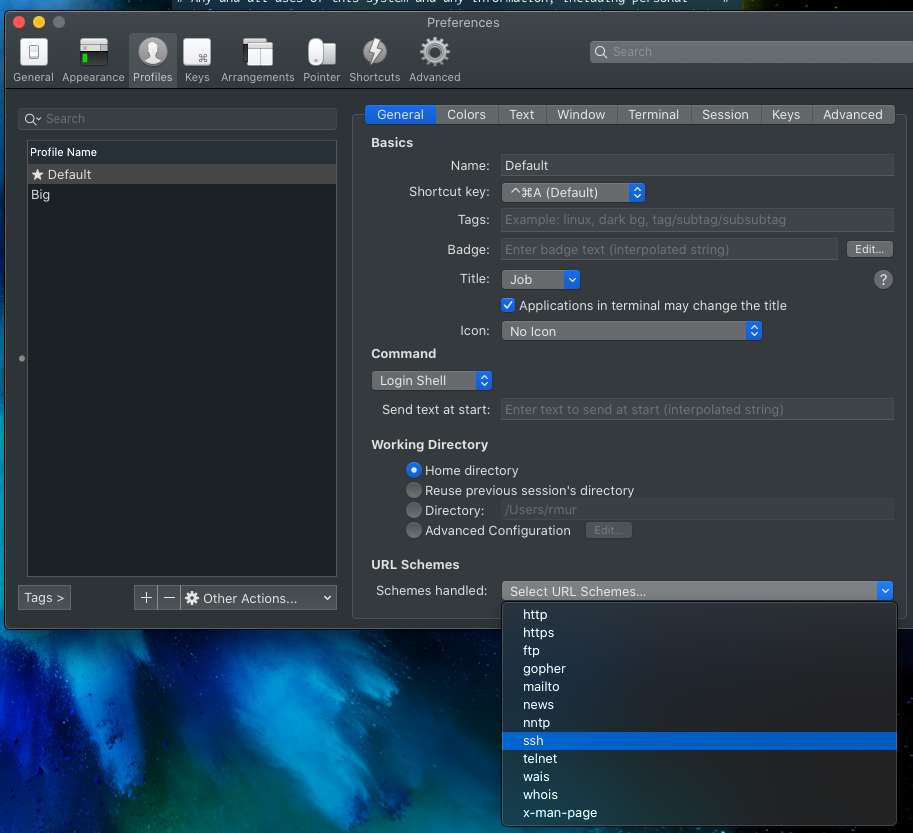

To access the devices, you open SecureCRT, which has sessions to all devices over both out-of-band management (SSH) and console. The sessions even log in automatically, so Juniper makes your life easy there. I did have to get used to some latency: it felt like the remote desktop was hosted in the US while I’m based in Europe, and a latency of roughly 150 ms takes some adjusting to when you type fast.

If you want a break to grab a drink or go to the toilet, raise your hand on the Zoom call to get the proctor’s attention and ask for permission before leaving your desk. The official lunch break is 1 hour, but of course you are not allowed to be away for that whole time. The proctor will ask you to eat your lunch at your desk in front of the camera, and you can take some time to rest. My proctor was kind enough to let me get back to work after about 20 minutes and told me my official finishing time for the exam.

Time management

Managing your time is an absolutely crucial part of taking a lab exam. Early in my preparation I really struggled to finish a decent-sized lab on time, but after pushing hard on speed and concentration I was able to finish the super labs in the workbook in under 4 hours. During the lab I’m always very aware of the time I spend on each section or part of the test. I keep track of it by writing timestamps in a text file, noting which part I finished at each time. I like to go through the tasks as quickly as I can so that I have plenty of time left for reviewing.
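A timestamp log like this is just a text file, but when reviewing a practice lab afterwards, a few lines of Python can turn it into minutes spent per section. This is a minimal, hypothetical sketch: the `HH:MM note` line format, the sample entries and the `minutes_per_section` helper are all assumptions for illustration, not part of the exam or the workbook.

```python
from datetime import datetime

# Hypothetical log format: "HH:MM <note>", one entry per line, written whenever
# a section is finished. The first line marks the start of the lab.
LOG = """\
08:00 start
08:55 finished section 1
10:10 finished section 2
11:05 finished section 3
"""


def minutes_per_section(log: str) -> list[tuple[str, int]]:
    """Return (note, minutes since the previous entry) for every entry after the first."""
    entries = []
    for line in log.splitlines():
        line = line.strip()
        if not line:
            continue  # skip blank lines
        stamp, _, note = line.partition(" ")
        entries.append((datetime.strptime(stamp, "%H:%M"), note))

    result = []
    # Pair each entry with the one before it to get the time spent on that part.
    for (prev_time, _), (time, note) in zip(entries, entries[1:]):
        minutes = int((time - prev_time).total_seconds() // 60)
        result.append((note, minutes))
    return result


if __name__ == "__main__":
    for note, minutes in minutes_per_section(LOG):
        print(f"{minutes:4d} min  {note}")
```

With the sample log, this reports 55, 75 and 55 minutes for the three sections, which makes it easy to spot the part of a practice lab that needs more speed work. The sketch assumes all timestamps fall on the same day.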

When I returned to work after lunch, I was about 80% done with the tasks and could finish all of them in about 30 minutes, so I had plenty of time left. I have to admit that during these lab exams my typing speed is a lot higher than normal, as I’ve trained to work on these configurations for hours. With over 3 hours left on the clock, I went back to the very first task and tried to read it as if I had never seen it before, to trick myself into working out the solution again. Overall I made 2 small changes to the configuration; I don’t know whether they made an impact, but to me they felt like the better answer to the task.

With about 30 minutes left, I felt I had checked everything twice, so I calculated how many points I was certain of and how many I had some doubt about. The passing score is not disclosed, but I assumed it is around 78-80%. Since my own verification gave me more than enough points, I told the proctor I was finished.
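Counting only the points you are certain of and comparing them against the upper end of the assumed 78-80% range gives a conservative self-check. A minimal sketch of that arithmetic follows; the section names, point values and the 80% default are made-up assumptions (the real pass mark is not disclosed), and `score_estimate` is a hypothetical helper.

```python
# Hypothetical point distribution for a practice lab: (section, points, certain?)
# Section names and point values are made up for illustration.
TASKS = [
    ("section 1", 25, True),
    ("section 2", 20, True),
    ("section 3", 20, True),
    ("section 4", 15, True),
    ("section 5", 10, False),  # marked as doubtful
    ("section 6", 10, True),
]


def score_estimate(tasks, assumed_pass_pct=80.0):
    """Return (certain %, doubtful points, certain % >= assumed pass mark)."""
    total = sum(points for _, points, _ in tasks)
    if total == 0:
        raise ValueError("no points to score")
    # Only points you are certain of count towards the conservative estimate.
    certain = sum(points for _, points, ok in tasks if ok)
    certain_pct = 100 * certain / total
    return certain_pct, total - certain, certain_pct >= assumed_pass_pct


if __name__ == "__main__":
    pct, doubtful, likely_pass = score_estimate(TASKS)
    print(f"certain: {pct:.1f}%  doubtful points: {doubtful}  above assumed pass mark: {likely_pass}")
```

Using the upper end of the guessed range as the default errs on the safe side: if the certain points already clear 80%, the doubtful ones are a bonus rather than something the result depends on.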

Conclusion

The official waiting period for results is ‘up to 5 working days’. My result did take all 5 working days, so a full week after my lab attempt I received the e-mail telling me I had passed!

The JNCIE-DC exam is definitely a tough one, and I feel lucky to have passed it on the first attempt. The complexity lies mainly in the fact that everything builds on top of everything else, so a mistake in one of the layers impacts reachability across pretty much the entire exam. It’s absolutely crucial to be exact about the details of the tasks, but also not to overthink them! Do exactly what the task says; within its constraints, you are free to choose the solution you want. The exam is not there to trick you, only to test whether you are an expert!