As explained in my previous post on my home servers, I have a bare metal system deployed with EVE-NG Pro installed. As I’m (slowly) preparing for the JNCIE-DC certification I wanted to share the topology that I’m using.

As the hardware required to study for the JNCIE-DC is quite significant, it makes a lot of sense to try and virtualise most of these resources. Unfortunately not the entire blueprint can be tested with virtual appliances, but we can get a very long way. Some (or a lot of) experience with actual Juniper QFX and MX products is very useful in your preparation for the JNCIE-DC.

Juniper offers a very good self-study product for the JNCIE-DC. This self-study workbook contains a number of chapters, each with in-depth tasks on a specific topic of the lab blueprint, and also contains 2 full labs that are very similar in complexity to the real JNCIE-DC lab test.

At the time I’m writing this, there are (as far as I know) no options to rent a rack of physical hardware to prepare for the exam, because the self-study workbook offered by Juniper Education Services also uses a virtual lab topology based on the vMX and vQFX products.

The lab topology used in that self-study workbook is used in my topology as well, so you are able to do all labs in that workbook on this topology as the same virtual appliances are used.

JNCIE-DC Lab Device Blueprint

Let’s first take a look at the blueprint of the JNCIE-DC lab exam to know what topics are covered and what type of devices are used when you take the exam.

An important prerequisite is that you need a valid JNCIP-DC certificate to be able to schedule a lab date with Juniper.

The lab exam consists of a number of the following devices:

- QFX5100 running Junos 14.1

- MX80 running Junos 17.1

- vMX running Junos 15.1

- vSRX running Junos 12.1

Feature-wise there should not be much difference between the MX80 and vMX devices, as the Packet Forwarding Engine (PFE) is also virtualized in the vMX. They do run a different version of Junos, so be aware of features or configuration syntax that may have changed between these versions.

At the time of this writing in 2020, the Junos versions running in the lab are quite old. As far as I know this list is still current, as I’m not aware of any announcement of an update to the blueprint or the versions in the lab. The versions are so old that most of them are no longer available for download from the Juniper website.

You can also clearly see that the lab consists of a combination of physical devices and virtual devices. Again, when configuring the tasks you will not (or should not) feel any difference in the handling and operation of the devices.

Juniper lab exams are also known to have extra devices in the topology that you will not have access to and do not have to configure, but that you will need to interface with and set up some form of connectivity to. They will act as external routers (like an Internet connection) or a remote site to interconnect with. So be prepared to troubleshoot connectivity issues from one side only (for example, how to troubleshoot BGP adjacency issues from the local router, without knowing the configuration of the other side).
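As a study aid for that kind of one-sided troubleshooting, here is a minimal Python sketch that maps the standard BGP session states (as defined in RFC 4271, and as reported by `show bgp neighbor`) to things worth checking on the local router. The hints are generic suggestions of my own and are not taken from the workbook or the exam; the names `BGP_STATE_HINTS` and `hint_for` are made up for this example.

```python
# BGP finite-state-machine states from RFC 4271, with generic local checks.
# These hints are illustrative suggestions, not exam or workbook content.
BGP_STATE_HINTS = {
    "idle": "Session is not being attempted: check that the neighbor is "
            "configured, not deactivated, and that a route to the peer exists.",
    "connect": "TCP connection attempt in progress: check IP reachability "
               "to the peer address.",
    "active": "TCP session is not coming up: check the peer and local "
              "addresses, and anything filtering TCP port 179.",
    "opensent": "TCP is up and an OPEN was sent: look for a NOTIFICATION, "
                "for example about a peer AS mismatch.",
    "openconfirm": "OPEN messages were exchanged: waiting for the first "
                   "KEEPALIVE from the peer.",
    "established": "Session is up: move on to checking received and "
                   "advertised routes.",
}


def hint_for(state):
    """Return a troubleshooting hint for a BGP state name (case-insensitive)."""
    key = state.strip().lower()
    return BGP_STATE_HINTS.get(key, f"Unknown BGP state: {state!r}")


if __name__ == "__main__":
    for name in ("Active", "OpenSent", "Established"):
        print(f"{name}: {hint_for(name)}")
```

The point of the lookup is simply that the local state already narrows down where the problem is, even when the remote configuration is hidden.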

I would expect the devices in the lab to also have an initial configuration already present. You may even have to troubleshoot this as there could be a mistake in there on purpose.

In this post I will not cover the task blueprint as the Juniper website has a very detailed overview of the possible tasks and topics that are covered in the test.

JNCIE-DC lab in EVE-NG

The tasks of the self-study workbook are all based on virtual appliances, as mentioned before. We will use multiple vMX and full vQFX devices. The vQFX comes in 2 flavours: Lite and Full. The Lite version only contains a routing engine, which enables you to test routing features. For anything beyond layer 3 routing (which is enough to test IP fabrics, for example), you will need the Full version, which adds a second VM that runs a virtualised version of the Q5 chipset. These 2 VMs combined (just like with the vMX) run a full version of a QFX10000 type switch. Unfortunately this is not a QFX5100 based switch (which is based on Broadcom Trident 2 silicon). The main difference in configuration between the QFX10k (with Juniper silicon) and the QFX5100 is how layer 2 bridge domains, and therefore EVPN configuration, are handled, so before taking the test be aware of the limitations the QFX5100 has (hint: one ‘virtual’ switch vs multiple).

To be able to run the full topology that the workbook uses you will need:

- 6 vMX routers

- 6 vQFX switches

- 1 vSRX firewall

- 1 Junos Space VM

- 1 Linux VM

As both the vMX and vQFX require 2 VMs per device, we will need a total of 27 virtual machines to run the full topology. As you can imagine, this will consume quite a lot of resources on your system. I would recommend getting at least an 8 core CPU and 64GB RAM. The RAM requirement can be a bit lower as EVE-NG has excellent memory deduplication features. For CPU cores, it really is the more the better. If you are able to keep this lab running for a long time, the core count is not that big of a deal, but especially when booting everything up, a higher CPU core count is very welcome.

There is one important item that cannot be tested on the virtual appliances and that is Virtual Chassis Fabric, which is not supported on the QFX10000. There are not many commands involved in getting this up and running, and the workbook offers a good explanation that should be enough for the lab exam.
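As a quick sanity check on that number, here is a minimal Python sketch that adds up the virtual machines from the device list above. The dictionary layout and the function name `total_vms` are just choices for this example.

```python
# Device counts come from the list above. The second number is how many VMs
# each device needs: vMX and vQFX use two VMs each, everything else uses one.
DEVICES = {
    "vMX": (6, 2),
    "vQFX": (6, 2),
    "vSRX": (1, 1),
    "Junos Space": (1, 1),
    "Linux": (1, 1),
}


def total_vms(devices):
    """Sum count * VMs-per-device over every device type."""
    return sum(count * vms for count, vms in devices.values())


if __name__ == "__main__":
    for name, (count, vms) in DEVICES.items():
        print(f"{name}: {count} x {vms} = {count * vms} VMs")
    print("Total:", total_vms(DEVICES))
```

Adjusting the counts in `DEVICES` gives a quick estimate if you decide to run only part of the topology.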

JNCIE-DC Lab Topology

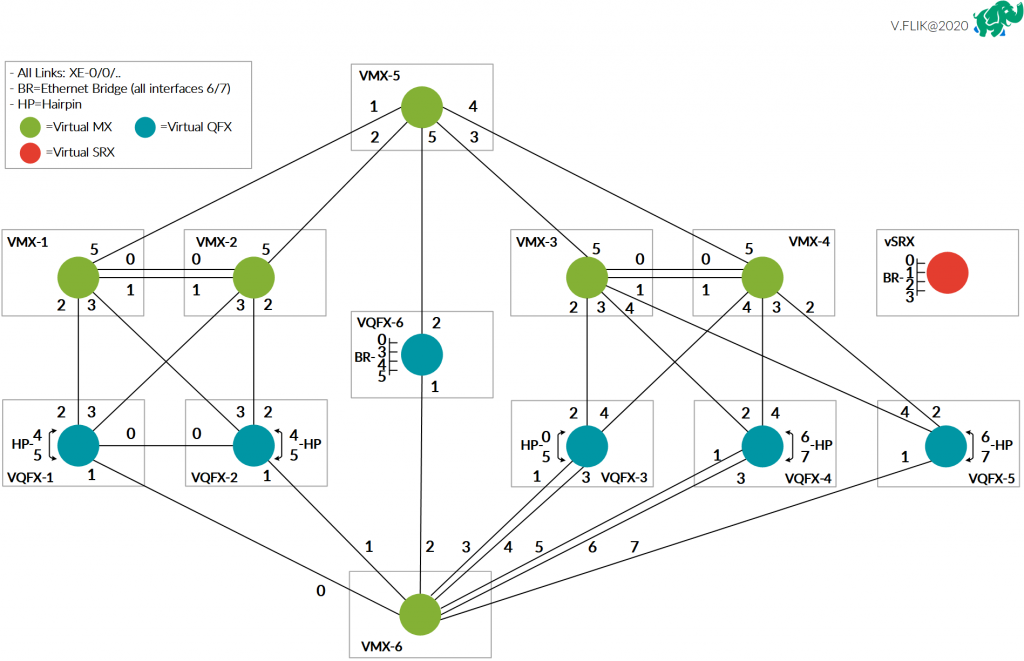

There are quite a number of connections to take care of. My good friend Valentijn Flik made a diagram of the topology as my versions turned out more like spider webs.

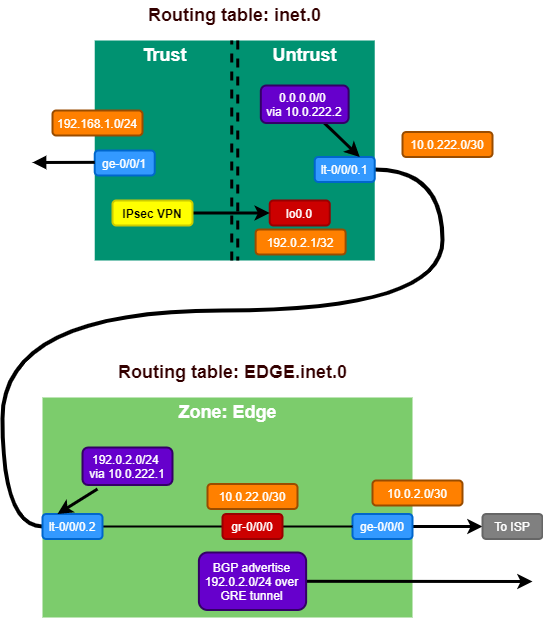

The main topology represents 2 datacenter sites. One contains 2 vMX routers as spines and 2 vQFX switches as leafs. The second contains 2 vMX routers and 3 vQFX switches in a more typical Clos fabric wiring. All devices have connections to either vMX5 or vMX6 for ‘backbone’ or external connectivity testing. The top vMX5 will be used more as a peering or external connection router, and the bottom vMX6 is used more to simulate network hosts in the setup.

Some devices have connections that loop back into the same device. These are called hairpin connections.

All devices also have ports 6 and 7 connected to an Ethernet bridge. On this bridge segment the lab tasks use 802.1Q VLAN tags to simulate connections between devices that are not physically there. You do not need to configure this yourself, as the initial configuration provided by the workbook will take care of it when you load the configs for a certain task. The bridge in EVE-NG that I used is just a standard bridge network, which should be sufficient for all tasks.

I have also connected the out-of-band management interface of every device to a virtual bridge that connects to my home LAN, so I can reach all devices over their OOB IP address.
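To give an idea of what such a tagged connection looks like, here is a minimal Python sketch that prints Junos `set` commands for one 802.1Q-tagged layer 3 unit on a bridge-facing port. The interface name `ge-0/0/6`, VLAN ID 100, the host names and the 192.0.2.0/30 addresses are all assumptions for illustration only; the real values come from the workbook’s initial configurations.

```python
def vlan_unit_commands(interface, vlan_id, address):
    """Return Junos set commands for one 802.1Q-tagged layer 3 unit."""
    if not 1 <= vlan_id <= 4094:
        raise ValueError(f"VLAN ID {vlan_id} is outside 1-4094")
    return [
        f"set interfaces {interface} vlan-tagging",
        f"set interfaces {interface} unit {vlan_id} vlan-id {vlan_id}",
        f"set interfaces {interface} unit {vlan_id} family inet address {address}",
    ]


if __name__ == "__main__":
    # Hypothetical example: two routers sharing VLAN 100 across the bridge.
    for host, addr in (("vMX1", "192.0.2.1/30"), ("vMX2", "192.0.2.2/30")):
        print(f"# {host}")
        print("\n".join(vlan_unit_commands("ge-0/0/6", 100, addr)))
```

Because every device hangs off the same bridge, any two devices that use the same VLAN ID on their bridge-facing port behave as if they were directly connected.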

Software

There are a number of software images involved with this set-up. The virtual appliances are available for download on the Juniper website. The best way to find them is via the trial options that are available there.

As far as Junos versions are concerned, I use very recent versions of the appliances, actually the newest at the time of this writing. I do not expect behavior to be very different between the releases running in our lab and those in the actual lab exam.

You will also want a Junos Space VM if you really want to test the entire blueprint, but you will need a Juniper account with software download access to get access to that. Fortunately the self-study workbook also comes with a number of vouchers to use the Juniper provided virtual environment, which should be more than enough exposure to Junos Space to be able to understand it fully.

As for licensing, the vMX has a default bandwidth limit of 1 Mbps, which is more than enough for lab testing. On the vQFX I’m not aware of any licensing limitations; the platform is just limited to a very low packets-per-second rate, so again perfect for testing! Finally, the vSRX does have a time constraint when no license is installed. The system will stop working after 60 days, but this is easily reset by deleting the device and re-adding it to your topology. As all tasks start with a fresh or initial setup, there is no problem in doing so. You will not lose work either, as I would recommend saving your lab configs after you finish each task anyway; they are a great resource to check back on during your studies.

Using the lab

You can download my EVE-NG lab here. Be aware that you will need to make your own device definitions first, based on the software images downloaded earlier.

I would suggest following the documentation on the EVE-NG website to set up the appliances.

After creating the Juniper virtual appliance templates in EVE-NG, it’s time to import or create the JNCIE-DC lab topology. I would recommend creating it yourself, as you immediately learn a lot about which connections run where and it helps you learn the topology quicker.

The last part that’s required is the set of JNCIE-DC self-study workbook initial configurations. As the book and its resources are copyrighted I cannot share them here, but I downloaded them from the Juniper virtual environment. After purchasing the workbook you get a number of vouchers that allow you to schedule a full day of lab access. If you log on to the Linux virtual machine, there is a folder with all workbook configurations on the drive. That VM should have access to the Internet, so you can store a zip file of that folder on any file sharing website for use within your lab setup.

Happy Labbing

Once all VMs are booted, you can dig into the workbook, load the initial configurations of each chapter and start labbing! Feel free to use the lab topology diagram and/or the EVE-NG lab template for your own use and adjust them to your own preferences!

If you have any questions regarding the JNCIE-DC lab, running it on EVE-NG, or JNCIE-DC study in general, feel free to reach out below in the comments or via Twitter!