One of the latest features on the Juniper MX-series devices is the BRAS functionality. The first functionality (automatically configuring interfaces) has been available since a long time, but most BRAS features have been introduced last year in JUNOS 11.x releases. With JUNOS 11.4 (also a Long-Term-Support release) the features matured as all major components are now available and (fingers crossed) stable.

This functionality can be named in different ways. BRAS or Broadband Remote Access Server is the most common name. Other names are Broadband Network Gateway (BNG) or Broadband Service Router (BSR).

This functionality is used in Internet Service Provider environments usually where DSL or Cable is used as the last mile access.

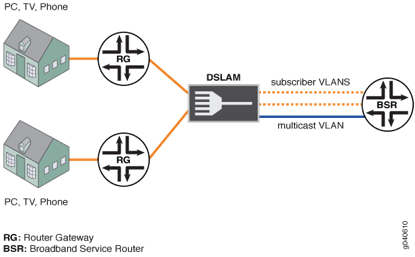

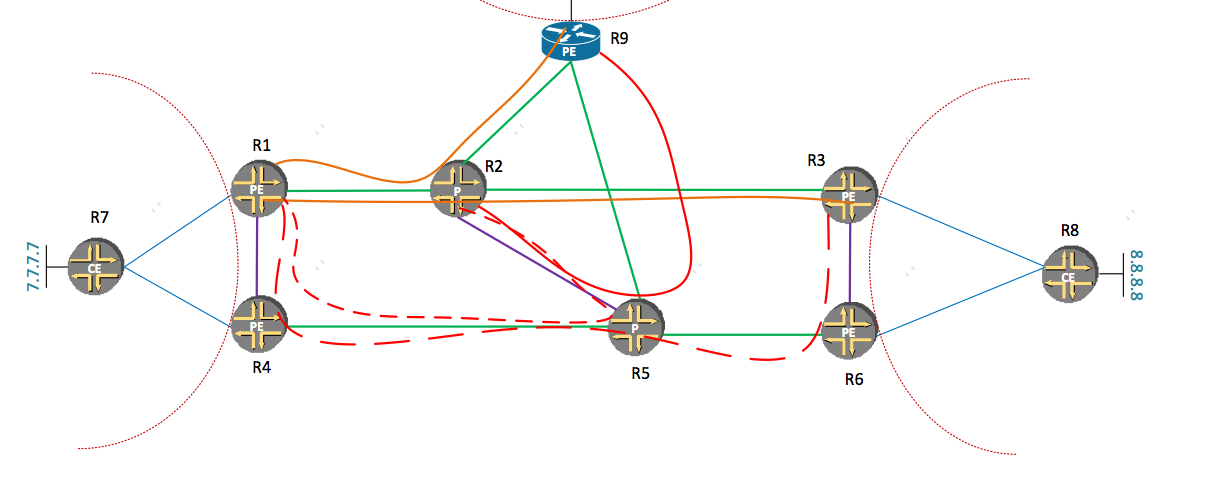

The following drawing demonstrates how the end-to-end path looks and where a BRAS/BSR is placed.

The CPE (DSL/Cable modem) is connected to the Multi-Service Access Node (MSAN), this MSAN is either a DSLAM in case of DSL networks or a CMTS in case of Cable networks. The DSLAM and CMTS devices convert the signal to Ethernet (or any other transport) and forward it to the rest of the network. This connection is then terminated on a BRAS device before it enters the rest of the network (and the internet).

The BRAS is used for 2 reasons. The first is for authenticating the client if it has the right to enter the network. Second is to enforce the subscription in terms of bandwidth limits and services that the client bought.

In the more classical model, when ATM was mostly used as transport layer, the identification of subscribers (as how clients are called on BRAS devices) where identified using PPP sessions. A client or CPE device initiates a PPP session. This ensures for encapsulation between client and BRAS and ensures some sort of circuit where you can apply authentication and enforce traffic control polices. Authentication of the client is very easy from the service provider standpoint, as a user has a username and password, which it needs to enter before getting authorized to the network. This is a little more hassle for the user as they need to know these values and have knowledge how to configure a ppp session, either on the CPE (modem) or on end-hosts.

The more modern/current approach to BRAS deployments is first of all using Ethernet as the transport layer for the usual reasons that Ethernet is very cheap and offers a lot of flexibility and now with the OAM features as 802.1ag it’s becoming very mature to use as carrier transport layer. Together with using Ethernet more flexible options become available as Ethernet utilizes the DHCP protocol for address assignments. This enables a very dynamic approach to enabling users on the network, but requires some administration by the ISP.

Traffic separation on Ethernet is ensured using IEEE 802.1Q based VLAN tags. This is done in 2 ways. Either using a single VLAN (per PoP or per service), which is called the S-VLAN model, or by using a separate VLAN for every customer (C-VLAN). In the C-VLAN model there are usually 2 tags stacked on each other as 4000 VLAN numbers is not enough for service provider scaling, so an additional tag is stacked which gives 4000×4000=16.000.000 combinations. Which should be more than enough for a single interface. This means that these models are not strictly compliant to any MEF or IEEE standard. It’s just terminology used in the BNG deployments.

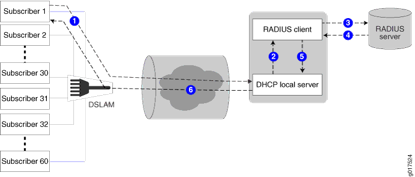

The “Life of a packet” in the DHCP BRAS model:

- CPE (modem / settopbox) is shipped to the client,

- CPE MAC addresses are registered with back office systems of the ISP.

- When installed the CPE issues a DHCP Offer message towards the network

- The packet is tagged with one or more VLAN (802.1Q) tags by the MSAN

- The tagged packet is received by the BRAS and depending on the VLAN tag combination a sub-interface (unit / IFL) is created dynamically according to pre-defined variables.

- In case of the S-VLAN model, there are still multiple subscribers sharing the same sub-interface, which limits the possibilities for configuration. Another sub-interface per subscriber is necessary. This will be based on the source IP address. This process is called ‘demux’ and uses the virtual demux0 interface within JUNOS. Within this process another sub-interface is created on top of the demux0 interface, which now ensures enough uniqueness.

- After the customer uniqueness is ensured the BRAS picks up the DHCP message and processes all possible options (within option 60 or 82, several properties can be set on which the MX can act).

- Next step is to send a request to the AAA server. The username that is used can be based on DHCP options or MAC address, or any custom keyword

- After authentication the AAA server responds with several attributes that fill in the variables of the configuration of the sub-interface.

- Finally a DHCP server is requested to hand out an IP address (can be local on the MX or remote through DHCP relay)

- Then finally everything comes together and the IP address is bound to the newly created sub-interface along with all properties as described in the profile and the variables that are sent with RADIUS attributes

- After the sub-interface is created the DHCP process is finalized using a DHCP Offer, Request, Accept and the client can access the network!

This was to give you a brief introduction into the BRAS functionality now with the widely deployed DHCP model. The main functionality that is now available to enable all this on the MX is the auto-configuration of sub-interfaces and the use of variables that can be filled in using RADIUS attributes.

During JUNOS 11.x releases the functionality matures and important things like GRES (supporting routing engine fail-overs) and versioning (changing profile configuration while subscribers are using that profile) became available and as of JUNOS 11.4 all major features are implemented.

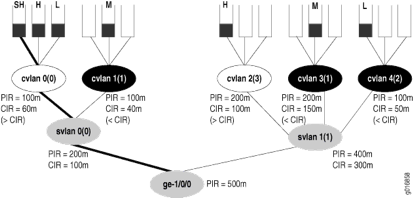

Please be aware of the platform that you choose to run the BRAS functionality on. As all the auto-configuration is performed on the routing-engine a fast RE is recommended! The new quad-core (RE-S-1800×4) routing-engine delivers blazing fast performance and enormous scaling in terms of IFLs (units / logical interfaces). When you want to deliver correct Class of Service for thousands of subscribers using a model for having various queues ensuring correct prioritization of voice/video traffic and shaping according to the bandwidth plan the customer bought you will need a feature called H-QoS (H for Hierarchical).

The per VLAN/subscriber scheduling and shaping is only available on the Q or EQ line cards on the MX platform. If you only want to use VLAN policing than you are good with a standard Trio/Cassis-based line card.

Within this model, you assume no control over the MSAN (CMTS or DSLAM), so to control the uplink bandwidth of the user you need input shapers to slow down the incoming traffic. With the Q and EQ linecards this is also possible as the queues can be distributed across both input and output traffic. To ensure correct scheduling for voice and video traffic the BRAS expects traffic to be marked with the correct DSCP and/or IP Precedence bits.

I hope you enjoyed my blog, please leave a comment if you have questions.