EVPN, or Ethernet VPN, is a new standard that has finally been given an RFC number. Many vendors have been working on implementations since the early draft versions, and even before that Juniper already used the same technology in its QFabric product. RFC 7432 was previously known as draft-ietf-l2vpn-evpn.

The day I started at Juniper I saw the power of the EVPN technology which was already released in the MX and EX9200 product lines. I enabled the first customers in my region (Netherlands) to use it in their production environment.

EVPN was initially targeted as a Data Center Interconnect technology, but it is now also deployed within Data Center fabric networks. In this blog I will explain why to use it, how the features work and finally which Juniper products support it.

Why?

Data Center interconnects have historically been difficult to create, because of the nature of Layer 2 traffic and the limited capabilities to control and steer the traffic. When I have to interconnect a Data Center today I have a few options that often don’t scale well or are proprietary. Some examples:

- Dark Fiber

- xWDM circuit

- L2 service from a Service Provider

- VPLS

- G.8032

- Cisco OTV or other proprietary solutions

Most of these options only work well in a point-to-point configuration between 2 data centers (Dark Fiber, xWDM circuits) or with only a few locations/sites (VPLS).

Only the proprietary solutions have a control-plane that controls the learning and distribution of MAC addresses. All others are technically just really long Ethernet cables that interconnect multiple Data Centers.

Now why is that so dangerous?

I’ve had numerous customers with complete Data Center outages because of this fact. No matter which solution you pick, if it does not offer a control-plane for MAC learning, problems that occur in one DC, like ARP flooding or other traffic floods, will be propagated to the other DC as well. The interconnection layer can only protect against this with features like storm-control. This means that a Layer 2 problem in DC1 impacts the other Data Centers, while in most cases the other Data Center is there precisely for high availability reasons.

EVPN will solve this!

How?

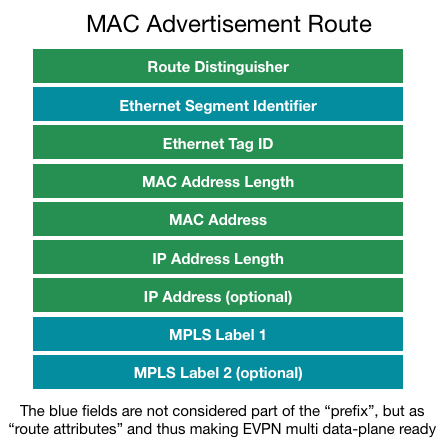

EVPN is technically just another address family in Multi Protocol (MP) BGP. This new address family allows MAC addresses to be treated as routes in the BGP table. The entry can contain just a MAC address or an IP address + MAC address (ARP entry). This can all be combined with or without a VLAN tag as well. The format of this advertisement is shown in the drawing.
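To make the advertisement format a bit more tangible, here is a minimal Python sketch that packs the fields of an EVPN MAC/IP Advertisement route (route type 2) in the order RFC 7432 lists them. The class name `MacIpRoute`, its field names and the example values are my own, chosen for illustration; this is not how any router implements it, and it leaves out the BGP attributes that travel with the route.

```python
import ipaddress
import struct
from dataclasses import dataclass
from typing import Optional


@dataclass
class MacIpRoute:
    """Fields of an EVPN MAC/IP Advertisement route (hypothetical helper class)."""
    rd: bytes                 # Route Distinguisher, 8 octets
    esi: bytes                # Ethernet Segment Identifier, 10 octets
    ethernet_tag: int         # Ethernet Tag ID (for example a VLAN), 0 if unused
    mac: str                  # MAC address written as "aa:bb:cc:dd:ee:ff"
    ip: Optional[str] = None  # optional IPv4/IPv6 address (the "ARP entry" case)
    label: int = 0            # 20-bit MPLS label used by the data plane

    def encode(self) -> bytes:
        """Pack the route fields into bytes, in RFC 7432 field order."""
        if len(self.rd) != 8 or len(self.esi) != 10:
            raise ValueError("RD must be 8 octets and ESI must be 10 octets")
        mac_bytes = bytes.fromhex(self.mac.replace(":", ""))
        if len(mac_bytes) != 6:
            raise ValueError("MAC address must be 6 octets")
        # The IP field is empty (length 0) when only a MAC is advertised.
        ip_bytes = ipaddress.ip_address(self.ip).packed if self.ip else b""
        # The label value sits in the high-order 20 bits of a 3-octet field.
        label_bytes = (self.label << 4).to_bytes(3, "big")
        return b"".join([
            self.rd,
            self.esi,
            struct.pack("!I", self.ethernet_tag),  # 4-octet Ethernet Tag ID
            bytes([48]),                           # MAC address length in bits
            mac_bytes,
            bytes([len(ip_bytes) * 8]),            # IP address length in bits
            ip_bytes,
            label_bytes,
        ])


# Example with made-up values: a MAC + IPv4 advertisement for VLAN 100.
route = MacIpRoute(rd=bytes(8), esi=bytes(10), ethernet_tag=100,
                   mac="00:11:22:33:44:55", ip="192.0.2.10", label=299776)
encoded = route.encode()
```

A MAC-only advertisement simply leaves `ip` unset, which makes the IP length field zero and the encoded route 4 octets shorter.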

BGP benefits

The immediate benefit of using BGP here is that we know how well the protocol scales. We won’t have any problems learning hundreds of thousands of MAC entries in the BGP table.

Route L2 traffic – All-Active Forwarding

The second benefit is that we now have MAC addresses as routing entries in our routing table and we can make forwarding decisions based on them. This means we can use multiple active paths between data centers and don’t have to block all but 1 link (which is the case in all the previously mentioned DCI technologies). The only traffic that is limited to 1 link is so-called BUM traffic (Broadcast, Unknown unicast and Multicast). For BUM traffic a Designated Forwarder (DF) is assigned per EVPN instance. This concept is not new; it is also found in TRILL-related protocols and in proprietary technologies.
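As an illustration of how a Designated Forwarder can be chosen, the sketch below follows the default election procedure from RFC 7432: the gateways attached to the same Ethernet segment are ordered by IP address, and the VLAN number modulo the number of gateways picks the winner. Note that the RFC runs this election per Ethernet segment and VLAN. The function name `elect_df` and the addresses are assumptions for this example; real implementations may offer other election methods.

```python
import ipaddress
from typing import List


def elect_df(pe_addresses: List[str], vlan_id: int) -> str:
    """Return the gateway that acts as Designated Forwarder for this VLAN.

    Sketch of the default modulo-based election: sort the gateway
    addresses numerically and pick position (vlan_id mod N).
    """
    if not pe_addresses:
        raise ValueError("at least one gateway address is required")
    ordered = sorted(pe_addresses, key=lambda a: int(ipaddress.ip_address(a)))
    return ordered[vlan_id % len(ordered)]


# Two gateways in the same data center (made-up addresses).
gateways = ["10.0.0.2", "10.0.0.1"]
df_vlan_100 = elect_df(gateways, 100)
df_vlan_101 = elect_df(gateways, 101)
```

Because the result depends on the VLAN number, the BUM forwarding role is spread over the gateways instead of landing on one device for every VLAN.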

ARP and Unknown Unicast

ARP traffic is treated differently than on a regular Layer 2 interconnect. First of all, Proxy-ARP is enabled when EVPN is enabled on an interface. The Edge gateway of the DC will respond to all ARP requests for which it knows the answer. This immediately implies a very important rule: when an ARP request is made for an IP address the Edge gateway doesn’t know, or when traffic is received with a Destination MAC that is unknown, the Edge gateway will drop this traffic! This immediately limits unknown unicast flooding, which is quite often a cause of issues, to a single DC. The Edge gateway has to learn about a MAC or ARP entry from the Edge gateway in the other DC before it will allow traffic to pass.
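The rule above can be sketched as a small lookup model. This is a toy Python model of the behaviour as described in this post, not of a particular implementation; the class `EdgeGateway`, its method names and the addresses are hypothetical.

```python
from typing import Dict, Optional


class EdgeGateway:
    """Toy model of the proxy-ARP and unknown-unicast rules described above."""

    def __init__(self) -> None:
        self.arp_table: Dict[str, str] = {}  # IP address -> MAC address
        self.mac_table: Dict[str, str] = {}  # MAC address -> where it was learned

    def learn(self, mac: str, learned_from: str, ip: Optional[str] = None) -> None:
        """Record a MAC (and optionally its IP) learned locally or from a BGP peer."""
        self.mac_table[mac] = learned_from
        if ip is not None:
            self.arp_table[ip] = mac

    def handle_arp_request(self, target_ip: str) -> Optional[str]:
        """Answer on behalf of the target if known; None means the request is dropped."""
        return self.arp_table.get(target_ip)

    def forward_unicast(self, dst_mac: str) -> Optional[str]:
        """Return where to send the frame; None means drop instead of flooding."""
        return self.mac_table.get(dst_mac)


gw = EdgeGateway()
# Entry learned from the gateway in the other data center (made-up values).
gw.learn("00:11:22:33:44:55", learned_from="dc2-gw1", ip="192.0.2.10")

known_reply = gw.handle_arp_request("192.0.2.10")    # answered by the gateway
unknown_reply = gw.handle_arp_request("192.0.2.99")  # not known, so dropped
```

The point of the model is that nothing crosses the interconnect unless an entry for it has been learned first.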

Multi-homing

We obviously want 2 gateway devices at the edge of our Data Center, so we have to support a multi-homing scenario. EVPN allows for all-active multi-homing, as previously explained, because of its routing nature. Besides this we need to limit the flooding of traffic, which is why a Designated Forwarder is chosen per instance to be responsible for receiving and sending BUM traffic. We also support split-horizon, to prevent traffic originating from our own Data Center from getting back in through the other Edge Gateway. This is done using the ESI (Ethernet Segment Identifier) field in the advertisement. An ESI identifies a certain “Data Center Site”. Both gateways in a single Data Center should use the same ESI, to prevent traffic from being looped back in, and other Data Centers should use a different ESI. This means that each Data Center will have 1 ESI.
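A rough sketch of how the Designated Forwarder and split-horizon rules combine when a gateway receives a BUM frame from another gateway is shown below. It is a simplification: the function compares ESI values directly, whereas EVPN-MPLS signals the originating segment with a label in the data plane. The function name and the ESI strings are assumptions for this example.

```python
def should_flood_to_local_site(frame_origin_esi: str,
                               local_esi: str,
                               is_designated_forwarder: bool) -> bool:
    """Decide whether a BUM frame received from a remote gateway
    may be flooded into the local data center site."""
    # Split horizon: the frame came from our own site through the
    # other gateway, so sending it back in would create a loop.
    if frame_origin_esi == local_esi:
        return False
    # Only the Designated Forwarder floods BUM traffic into the site,
    # so hosts do not receive duplicate copies.
    return is_designated_forwarder


# Made-up ESI values: one per data center site.
DC1_ESI = "00:11:11:11:11:11:11:11:11:11"
DC2_ESI = "00:22:22:22:22:22:22:22:22:22"

looped_back = should_flood_to_local_site(DC1_ESI, DC1_ESI, True)
from_other_dc_df = should_flood_to_local_site(DC2_ESI, DC1_ESI, True)
from_other_dc_non_df = should_flood_to_local_site(DC2_ESI, DC1_ESI, False)
```

Only the second case floods the frame: it comes from a different site and the receiving gateway is the Designated Forwarder.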

MAC Mobility

EVPN is designed for extremely fast convergence. Another big issue within the Data Center is what’s called MAC flapping and mobility. When a Virtual Machine moves with its MAC address, the MAC needs to be re-learned within the network and the old entry should be deleted. Within a single Layer 2 switch domain this happens quite fast, which also causes issues when a duplicate MAC address exists in the network: the same entry is then learnt on two different ports, causing “MAC Flapping”. EVPN solves this by attaching a sequence number that increments each time a MAC moves between Data Center locations. When a MAC moves too often within a certain time period (default 5 times in 180 seconds), it is suppressed until a retry timer expires. The other benefit of the sequence numbering is that when the Edge Gateways see an advertisement for a MAC address with a higher sequence number, they immediately withdraw the older entry, which improves convergence time after a VM move.
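The sequence-number and move-counting logic can be sketched in a few lines of Python. This is a simplified model under stated assumptions: the names `MacMobilityTable` and `receive` are mine, the retry timer that lifts the suppression is left out, and so is the tie-break for equal sequence numbers. The defaults of 5 moves in 180 seconds are the ones mentioned above.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, Dict


@dataclass
class MacEntry:
    next_hop: str       # gateway that currently advertises the MAC
    sequence: int       # highest sequence number seen so far
    move_times: Deque[float] = field(default_factory=deque)
    suppressed: bool = False


class MacMobilityTable:
    """Simplified MAC mobility handling with duplicate-MAC detection."""

    def __init__(self, max_moves: int = 5, window: float = 180.0) -> None:
        self.max_moves = max_moves
        self.window = window
        self.entries: Dict[str, MacEntry] = {}

    def receive(self, mac: str, next_hop: str, sequence: int, now: float) -> bool:
        """Process an advertisement; return True if it became the active entry."""
        entry = self.entries.get(mac)
        if entry is None:
            self.entries[mac] = MacEntry(next_hop, sequence)
            return True
        # Ignore suppressed MACs and stale (not newer) advertisements.
        if entry.suppressed or sequence <= entry.sequence:
            return False
        # A higher sequence number means the MAC moved: count the move
        # and forget moves that fall outside the detection window.
        entry.move_times.append(now)
        while now - entry.move_times[0] > self.window:
            entry.move_times.popleft()
        if len(entry.move_times) >= self.max_moves:
            entry.suppressed = True  # too many moves: likely a duplicate MAC
            return False
        entry.next_hop, entry.sequence = next_hop, sequence
        return True


table = MacMobilityTable()
table.receive("00:11:22:33:44:55", "dc1-gw1", sequence=0, now=0.0)
moved = table.receive("00:11:22:33:44:55", "dc2-gw1", sequence=1, now=10.0)
stale = table.receive("00:11:22:33:44:55", "dc1-gw1", sequence=0, now=11.0)
```

In this run the move to `dc2-gw1` is accepted because it carries a higher sequence number, while the late advertisement from `dc1-gw1` with the old sequence number is ignored.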

Layer 3 All-Active Gateway

The last benefit I want to highlight is that, because the Edge Gateway is fully aware of where hosts are located in the network, it can make the best Layer 3 forwarding decision as well. This means that Default Gateways are active on all edge gateways in all data centers. The routing decision can be taken on Layer 2 or on Layer 3, because the edge gateway is fully aware of ARP entries.

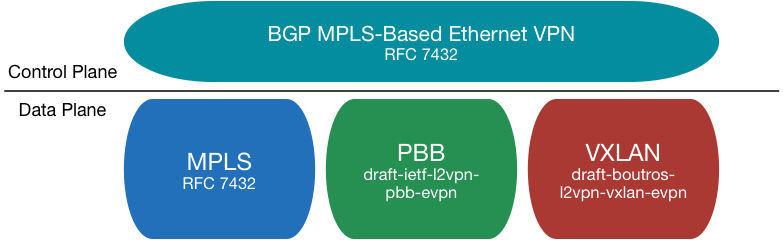

Data Planes

Currently there is 1 standard and there are 2 proposals that all rely on the same control-plane technology (EVPN). The first is the current standard, RFC 7432, which is EVPN with MPLS as its data-plane. The second is the EVPN-VXLAN proposal, where the same control-plane is used but the data-plane is VXLAN or another overlay technology. The third is the proposal to use PBB encapsulation over an EVPN control-plane with MPLS as data-plane.

What?

Finally I want to discuss which products support this technology and how you can implement it. Juniper currently implements RFC 7432 (EVPN-MPLS) on its MX product line, ranging from MX5 to MX2020, and on the EX9200 series switches. The EVPN-MPLS feature is designed to be used as a Data Center Interconnect.

Since the MX has fully programmable chips, Juniper also implements several overlay technologies like VXLAN. This means we can stitch a VXLAN network, Ethernet bridge domains, L2 pseudowires and L3 VPN (IRB interface) all together in a single EVPN instance.

Summary

All this means we have a Swiss Army knife full of tools to use within our Data Center, and we can interconnect various network overlays and multiple data centers using a single abstraction layer called EVPN!

In my next blog I will demonstrate the use of EVPN on the MX platform and how easy it is to implement an optimal Data Center Interconnect!

Nice post! What’s your opinion on EVPN vs PBB-EVPN. Without PBB there could be scalability concerns depending on the number of MAC addresses.

What I’ve noticed from the Cisco implementation is that it’s a bit tricky to troubleshoot it but that may be because we’re not very used to the protocol yet.

My opinion is that I don’t see the use case for PBB-EVPN. The whole goal of EVPN is to have that control-plane for Layer 2 traffic and make intelligent forwarding decisions based on the MAC entries in the routing/forwarding table. With PBB you abstract that away.

Scalability shouldn’t be an issue on today’s platforms anymore. We can scale easily to hundreds of thousands of MAC addresses and IP routes and keep the protocol still converging fast (look at the Internet Routing table).

Good post, thank you. I have a different opinion about PBB-EVPN though. It is a simplified version of EVPN very useful when you need to reduce the control plane overhead and still have single-active/all-active MH. By the way, you may want to correct the reference to the draft for EVPN for VXLAN. It is not draft-boutros but draft-ietf-bess-evpn-overlay…

Good write up explaining the benefits!

Yes, I just got it running in my lab with 4 units of asr9k and n9k as CEs with all active load sharing

Hi Chris,

Did you use PBB-EVPN or EVPN-MPLS in your lab testing?

Just wondering if Cisco has implemented RFC 7432 (EVPN-MPLS)?

Thanks

As far as I know Cisco has not implemented RFC7432, which still amazes me, as I’d be using that for any DCI deployment.

Thanks for confirming, Rick.

Nice post,

Though the question remains when we may finally see support for PBB-EVPN on the MX platform. With pure EVPN we are back in Y2K, when we had to scale the FIB by adding more PEs to the POP and creating separate RR planes to scale the control plane.

adam

I can’t comment on features that may come in the MX platform, but FIB scaling is definitely not an issue. The MX can store millions of FIB routing entries as well as a million MAC addresses. As for (virtual) route reflectors, these scale today to tens of millions of entries in RIB.

According to the release notes, there will be no more support for PBB on the MX platform starting with 14.1 🙁

Please consult your local Juniper resource regarding PBB-EVPN support on MX.

It was really helpful!! thnx for the write-up.

Nice post. Thank you so much

We have two Data Centers connected with 2*10G Dark Fiber. There is an application that depends on Layer 2, so we have to extend Layer 2 across the data centers, but we are facing Layer 2 issues like STP and ARP flooding. The edge devices are HP FlexFabric switches. Can we use EVPN to limit these Layer 2 issues and still keep the Layer 2 connectivity the application needs?

EVPN would be a good technology for your use case and would be an open standards approach to your problem. As far as I can see the HP FlexFabric switches only support EVPN/VXLAN, which uses ARP flooding to ensure reachability. EVPN/MPLS would be a better solution, and it is typically deployed on separate devices in the data center. I would recommend the Juniper MX or Juniper EX9200 series.

We use Avaya SPB in our network and we have bought 2 7k’s for our core data center switches. We want to have active-active DCs, and we have dark fiber for our DCI. Will EVPN work for us?

Very good post, thanks a lot! And I wish you all the best!